
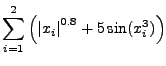

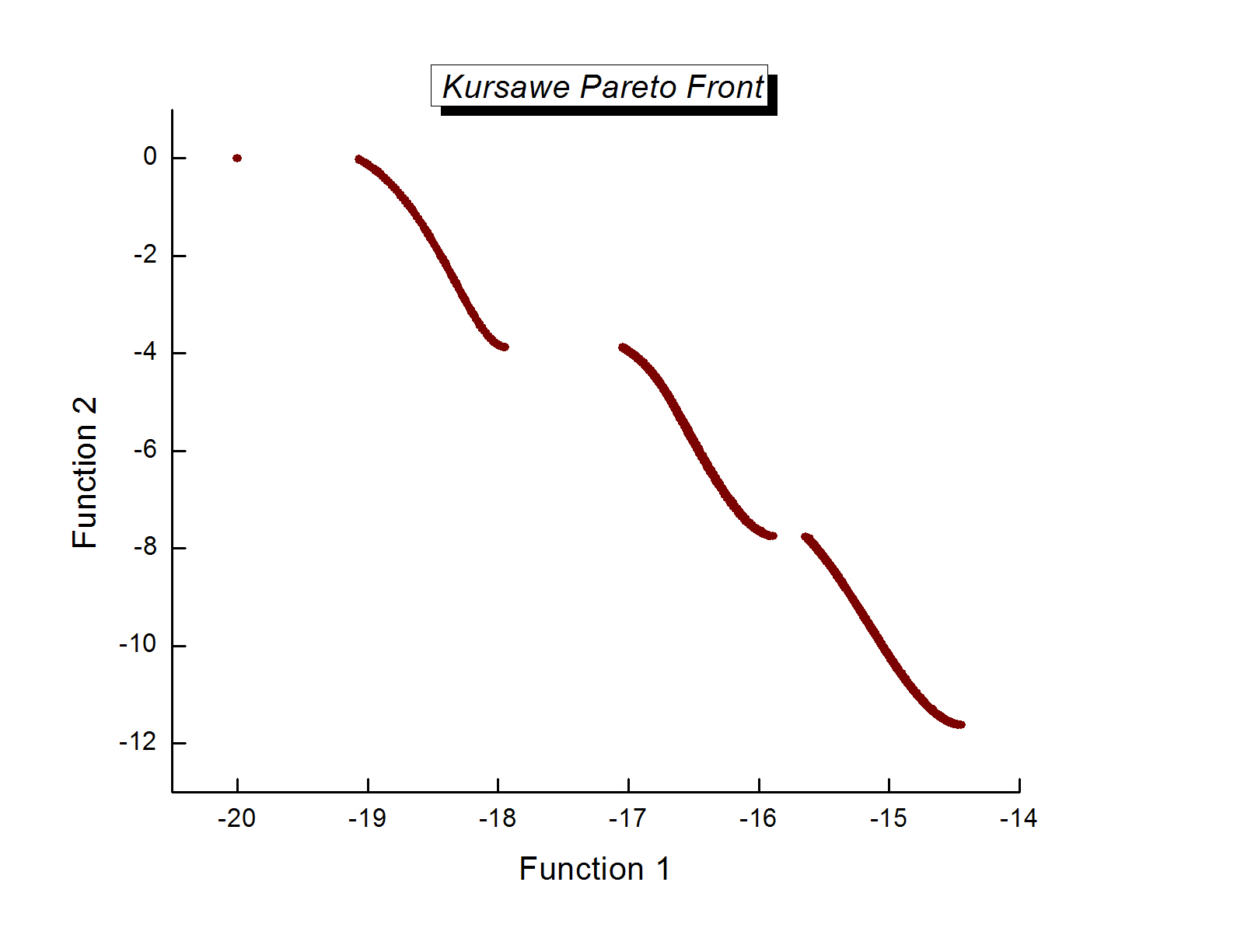


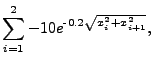

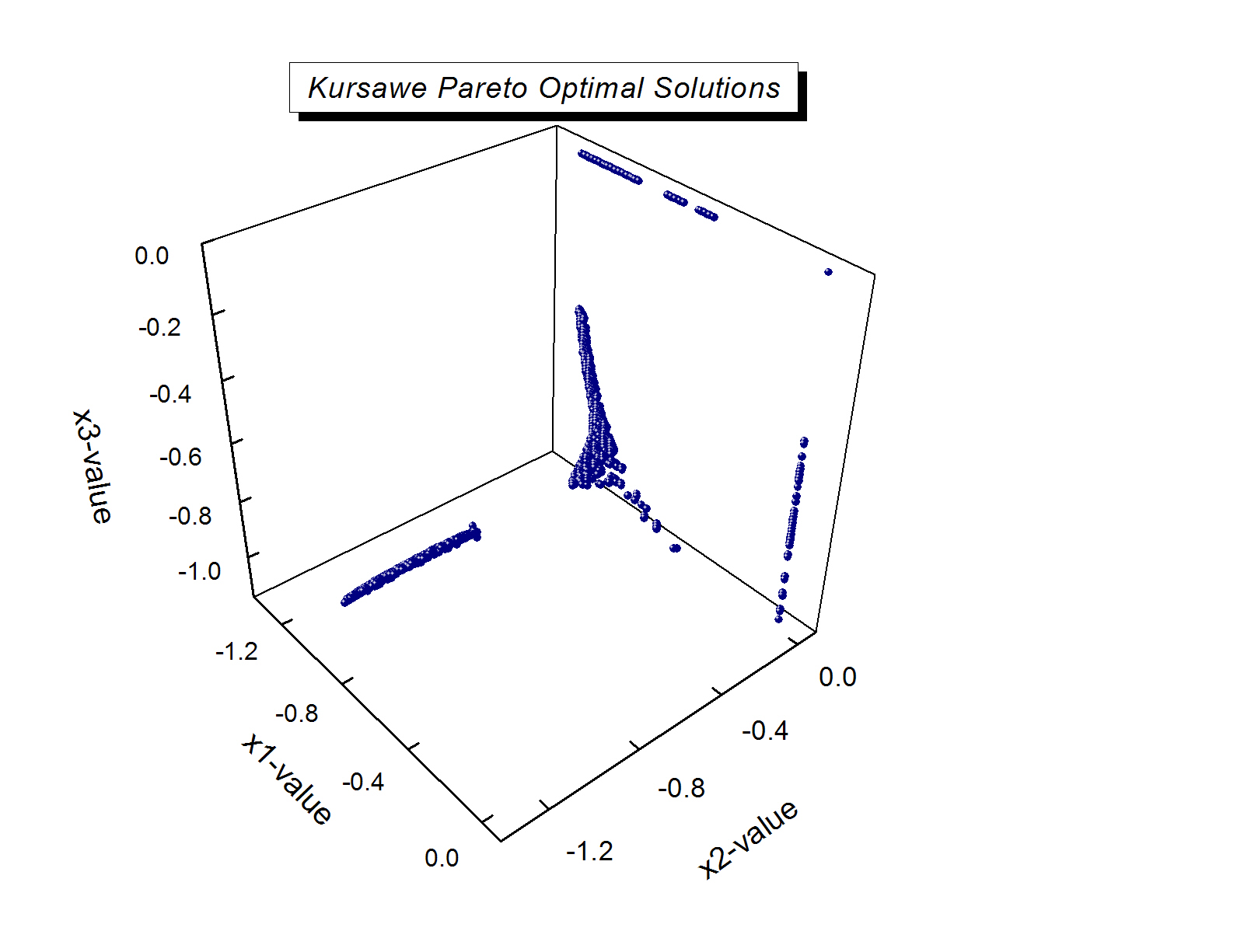

| KURSAWE |
|---|
| Proposed by Kursawe [4]. |
| Minimize |
| Kursawe Pareto Front (data file) |
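A minimal Python sketch of the Kursawe objectives. It assumes the form most benchmark suites use, with n = 3 variables in [-5, 5]:

- f1(x) = Σ_{i=1}^{n-1} -10·exp(-0.2·sqrt(x_i² + x_{i+1}²))
- f2(x) = Σ_{i=1}^{n} (|x_i|^0.8 + 5·sin(x_i³))

Some sources write sin³(x_i) instead of sin(x_i³). The sketch also adds a brute-force non-dominated filter, which is one way to approximate a Pareto front data file from random samples. The names `kursawe`, `dominates` and `non_dominated` are hypothetical, not taken from the source.

```python
import math
import random
from typing import List, Sequence, Tuple


def kursawe(x: Sequence[float]) -> Tuple[float, float]:
    """Kursawe objectives, both minimized.

    Assumed form: f2 uses sin(x_i**3); some sources write sin(x_i)**3.
    Variables are usually bounded to [-5, 5] with n = 3.
    """
    if len(x) < 2:
        raise ValueError("Kursawe needs at least two decision variables")
    # f1 couples each pair of neighbouring variables
    f1 = sum(
        -10.0 * math.exp(-0.2 * math.sqrt(x[i] ** 2 + x[i + 1] ** 2))
        for i in range(len(x) - 1)
    )
    # f2 is separable: one term per variable
    f2 = sum(abs(xi) ** 0.8 + 5.0 * math.sin(xi ** 3) for xi in x)
    return f1, f2


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if a is no worse than b everywhere and strictly better somewhere (minimization)."""
    return all(ai <= bi for ai, bi in zip(a, b)) and any(ai < bi for ai, bi in zip(a, b))


def non_dominated(points: List[Tuple[float, ...]]) -> List[Tuple[float, ...]]:
    """Brute-force O(n^2) filter keeping the points that no other point dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


if __name__ == "__main__":
    random.seed(0)
    samples = [[random.uniform(-5.0, 5.0) for _ in range(3)] for _ in range(500)]
    front = non_dominated([kursawe(x) for x in samples])
    print(f"{len(front)} non-dominated points out of {len(samples)}")
```

Random sampling gives only a rough front. A real reference front would normally come from many optimizer runs or a much denser sample.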

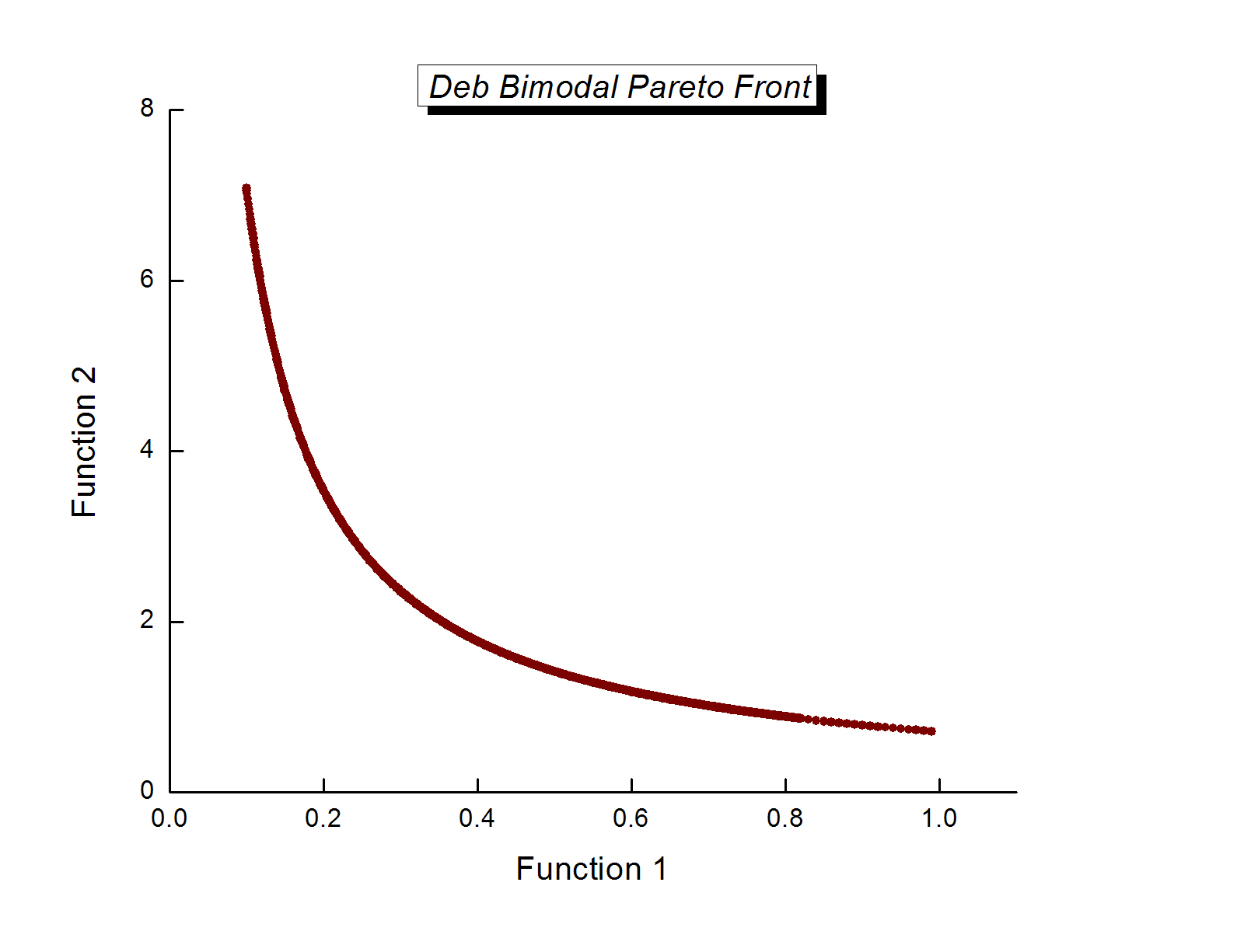

| DEB Bimodal |
|---|
| Proposed by Deb [1]. |
| Minimize |
| Deb Pareto Front (data file) |
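A sketch of Deb's bimodal problem. It assumes the commonly cited two-variable form, with 0.1 ≤ x1, x2 ≤ 1:

- f1 = x1
- f2 = g(x2)/x1
- g(x2) = 2 − exp(−((x2 − 0.2)/0.004)²) − 0.8·exp(−((x2 − 0.6)/0.4)²)

The narrow basin at x2 = 0.2 gives the global front. The wide basin near x2 = 0.6 gives a local front that optimizers can get trapped on. The function names are hypothetical.

```python
import math
from typing import Tuple


def deb_g(x2: float) -> float:
    """Bimodal g: a narrow global basin at x2 = 0.2 and a wide local one near x2 = 0.6."""
    return (
        2.0
        - math.exp(-(((x2 - 0.2) / 0.004) ** 2))
        - 0.8 * math.exp(-(((x2 - 0.6) / 0.4) ** 2))
    )


def deb_bimodal(x1: float, x2: float) -> Tuple[float, float]:
    """Deb's bimodal problem, both objectives minimized; assumes 0.1 <= x1, x2 <= 1."""
    if not (0.1 <= x1 <= 1.0 and 0.1 <= x2 <= 1.0):
        raise ValueError("variables must lie in [0.1, 1]")
    return x1, deb_g(x2) / x1
```

Under this form the global front is f2 = g(0.2)/f1 ≈ 0.7057/f1, and the local front is roughly f2 ≈ 1.2/f1.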

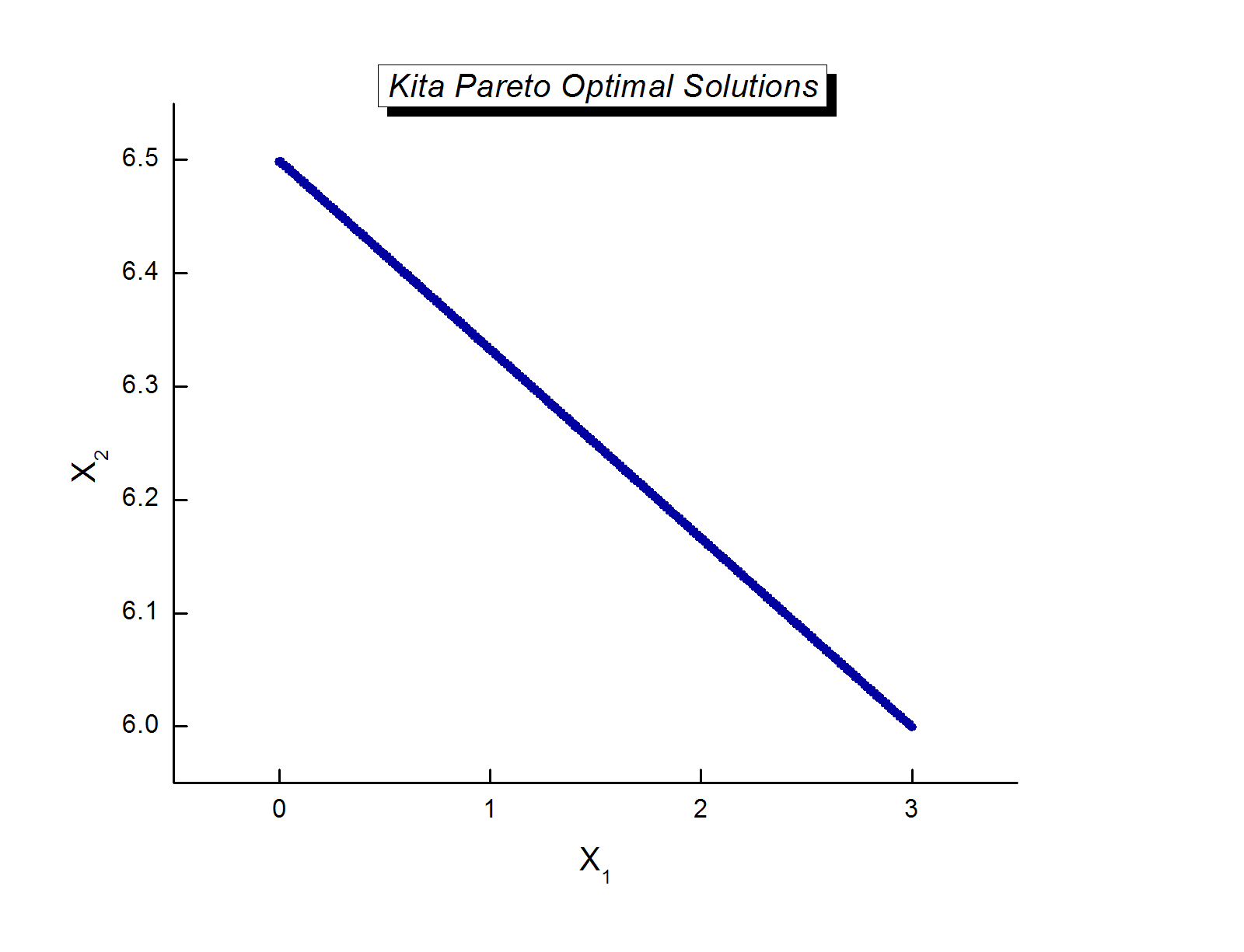

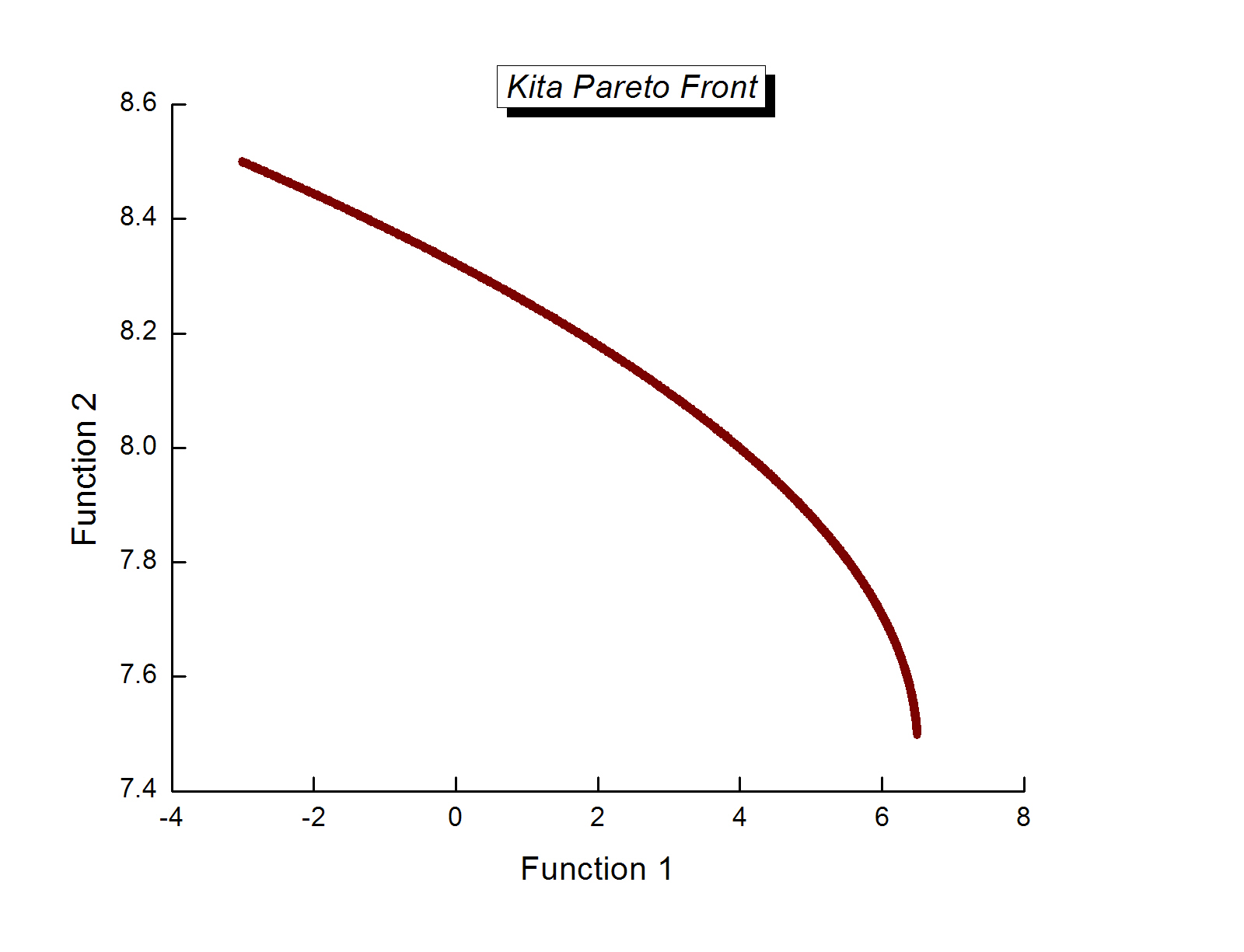

| KITA |
|---|
| Proposed by Kita [3]. |
| Maximize (subject to constraints) |
| KITA Pareto Front (data file) |
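A sketch of the Kita problem. It assumes the formulation usually quoted in test-problem collections:

- maximize f1 = −x² + y and f2 = x/2 + y + 1
- subject to x/6 + y − 13/2 ≤ 0, x/2 + y − 15/2 ≤ 0, 5x + y − 30 ≤ 0, and 0 ≤ x, y ≤ 7

The constraint set and bounds are assumptions here. The helper names, including the negation wrapper for minimization-only solvers, are hypothetical.

```python
from typing import Tuple


def kita(x: float, y: float) -> Tuple[float, float]:
    """Kita objectives; both are to be MAXIMIZED."""
    f1 = -x ** 2 + y  # ** binds tighter than unary minus, so this is -(x**2) + y
    f2 = 0.5 * x + y + 1.0
    return f1, f2


def kita_feasible(x: float, y: float) -> bool:
    """Assumed constraint set for the Kita problem, with 0 <= x, y <= 7."""
    return (
        x / 6.0 + y - 13.0 / 2.0 <= 0.0
        and x / 2.0 + y - 15.0 / 2.0 <= 0.0
        and 5.0 * x + y - 30.0 <= 0.0
        and 0.0 <= x <= 7.0
        and 0.0 <= y <= 7.0
    )


def kita_as_minimization(x: float, y: float) -> Tuple[float, float]:
    """Negate both objectives so minimization-only tools can be used."""
    f1, f2 = kita(x, y)
    return -f1, -f2
```

Negating the objectives is the usual way to reuse a minimizing optimizer. Infeasible points still need separate handling, for example by rejection or a penalty.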

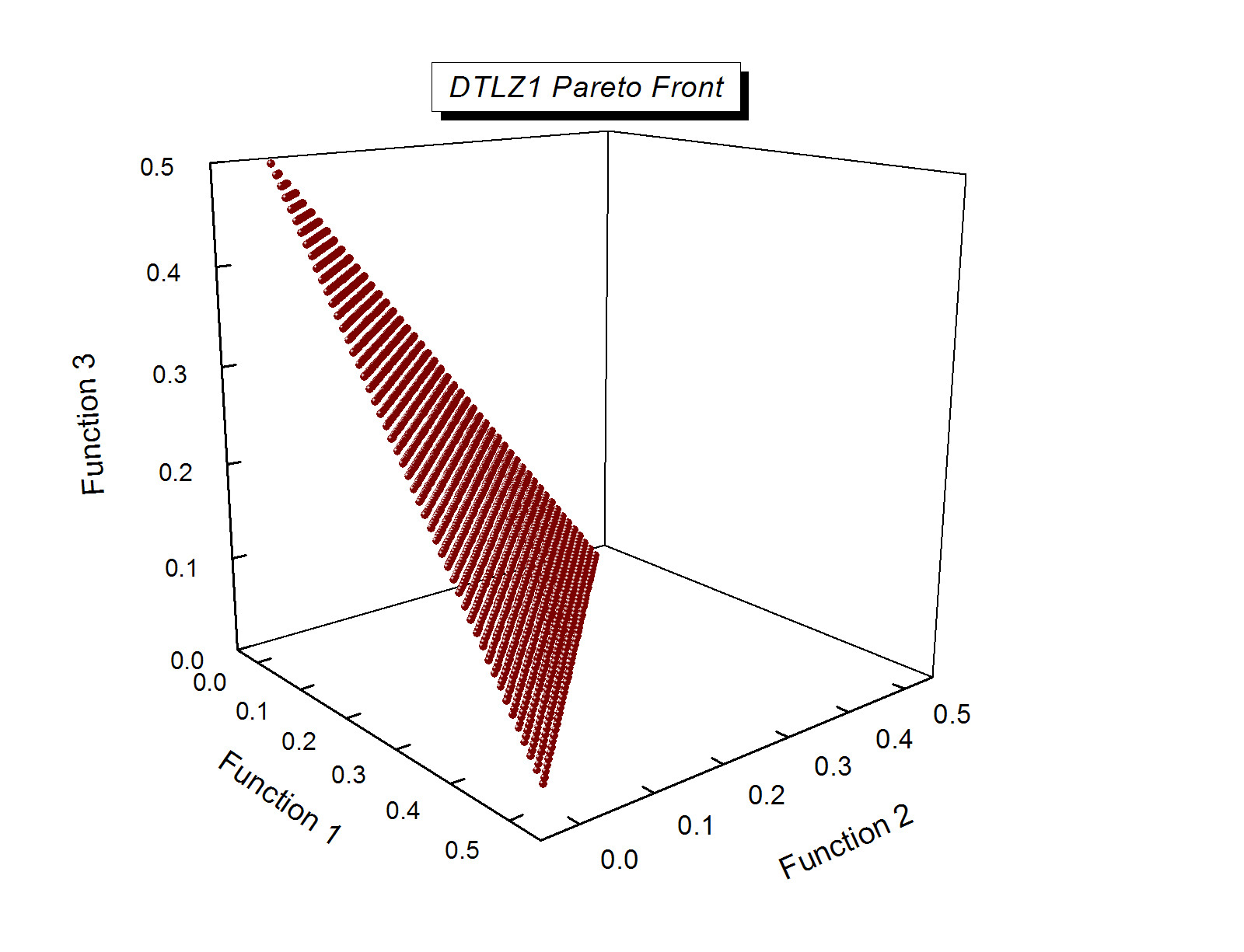

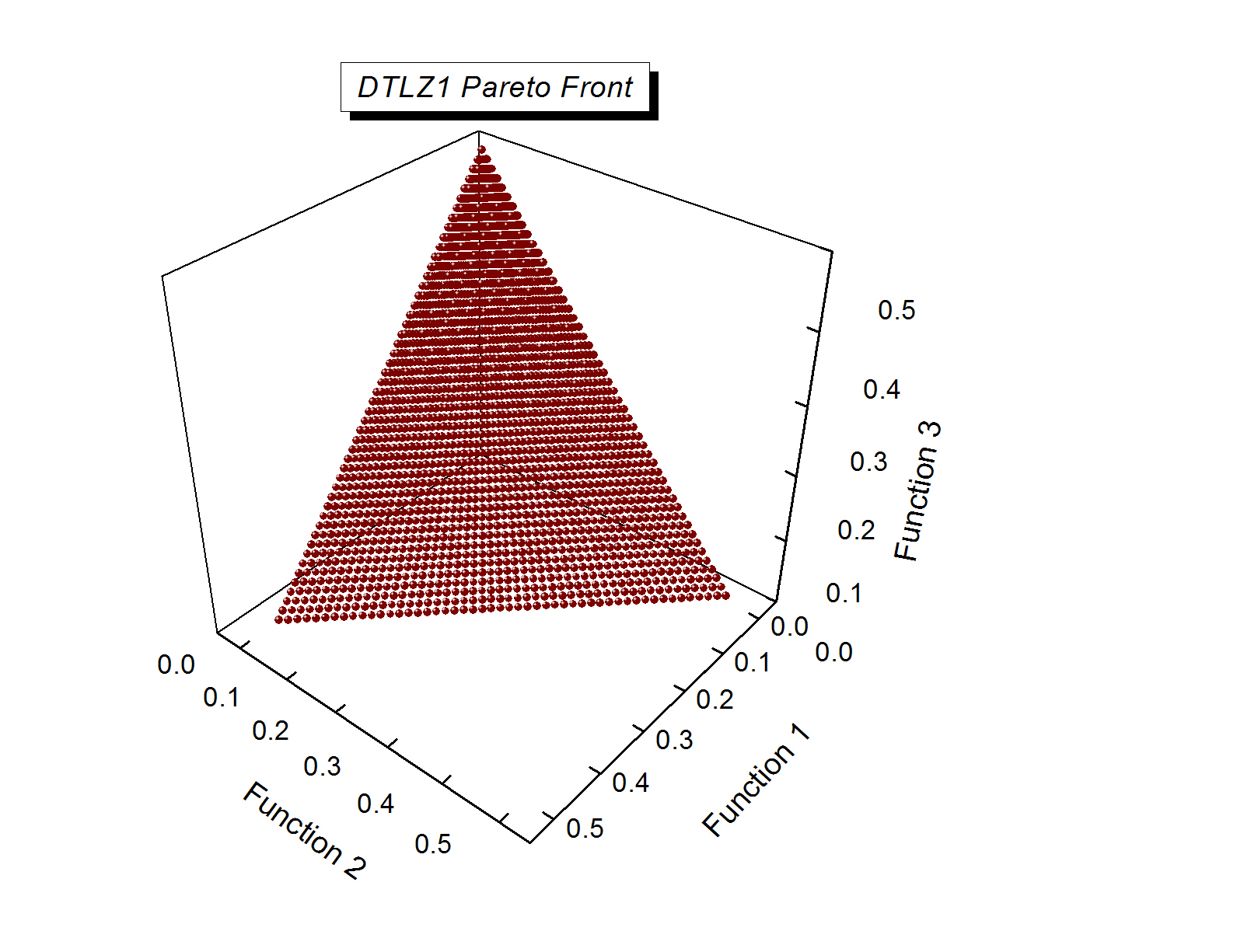

| DTLZ1 |
|---|
| Proposed by Deb et al. [2]. |
| Minimize |
| DTLZ1 Pareto Front (data file) |
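A sketch of DTLZ1. It assumes the standard scalable definition with M objectives and n = M + k − 1 variables in [0, 1]; k = 5 is a common choice:

- g = 100·[k + Σ((x_i − 0.5)² − cos(20π(x_i − 0.5)))], summed over the last k variables
- f_1 = ½·x_1⋯x_{M−1}·(1 + g)
- f_2 = ½·x_1⋯x_{M−2}·(1 − x_{M−1})·(1 + g)
- …
- f_M = ½·(1 − x_1)·(1 + g)

Pareto-optimal solutions set every distance variable to 0.5, which makes g = 0. The front is then the linear hyperplane Σf_i = 0.5. The function name `dtlz1` is illustrative.

```python
import math
from typing import List, Sequence


def dtlz1(x: Sequence[float], m: int) -> List[float]:
    """DTLZ1 with m objectives; x has n = m + k - 1 variables in [0, 1]."""
    k = len(x) - m + 1
    if m < 2 or k < 1:
        raise ValueError("need m >= 2 and at least m variables")
    # g uses the last k "distance" variables; g = 0 when they all equal 0.5
    g = 100.0 * (
        k
        + sum((xi - 0.5) ** 2 - math.cos(20.0 * math.pi * (xi - 0.5)) for xi in x[m - 1:])
    )
    f = []
    for i in range(m):
        value = 0.5 * (1.0 + g)
        # product of the first m-1-i "position" variables
        for j in range(m - 1 - i):
            value *= x[j]
        # every objective except the first has one (1 - x) factor
        if i > 0:
            value *= 1.0 - x[m - 1 - i]
        f.append(value)
    return f
```

For example, with M = 3 and k = 5, `dtlz1([0.3, 0.6] + [0.5] * 5, 3)` lies on the front because its objectives sum to 0.5.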

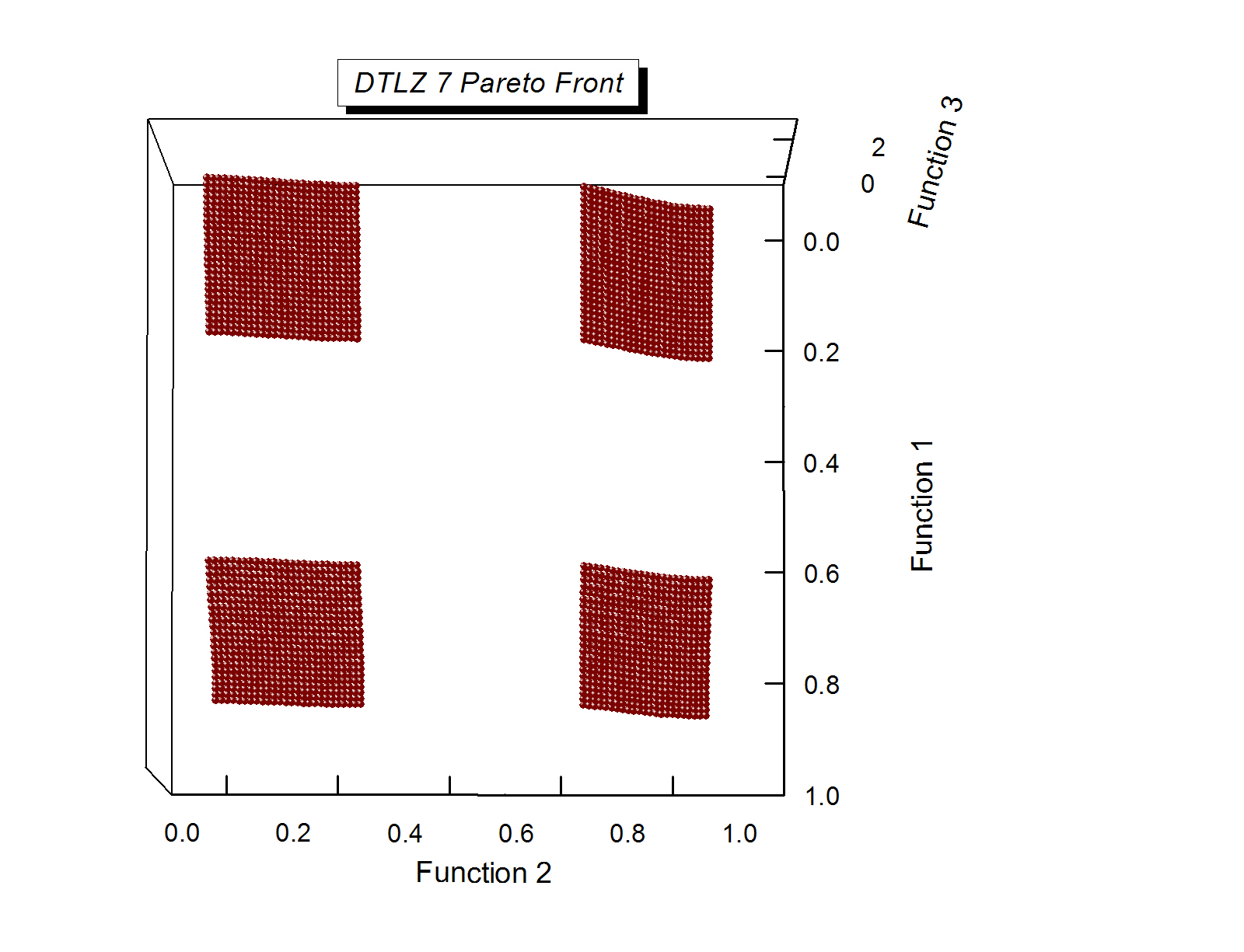

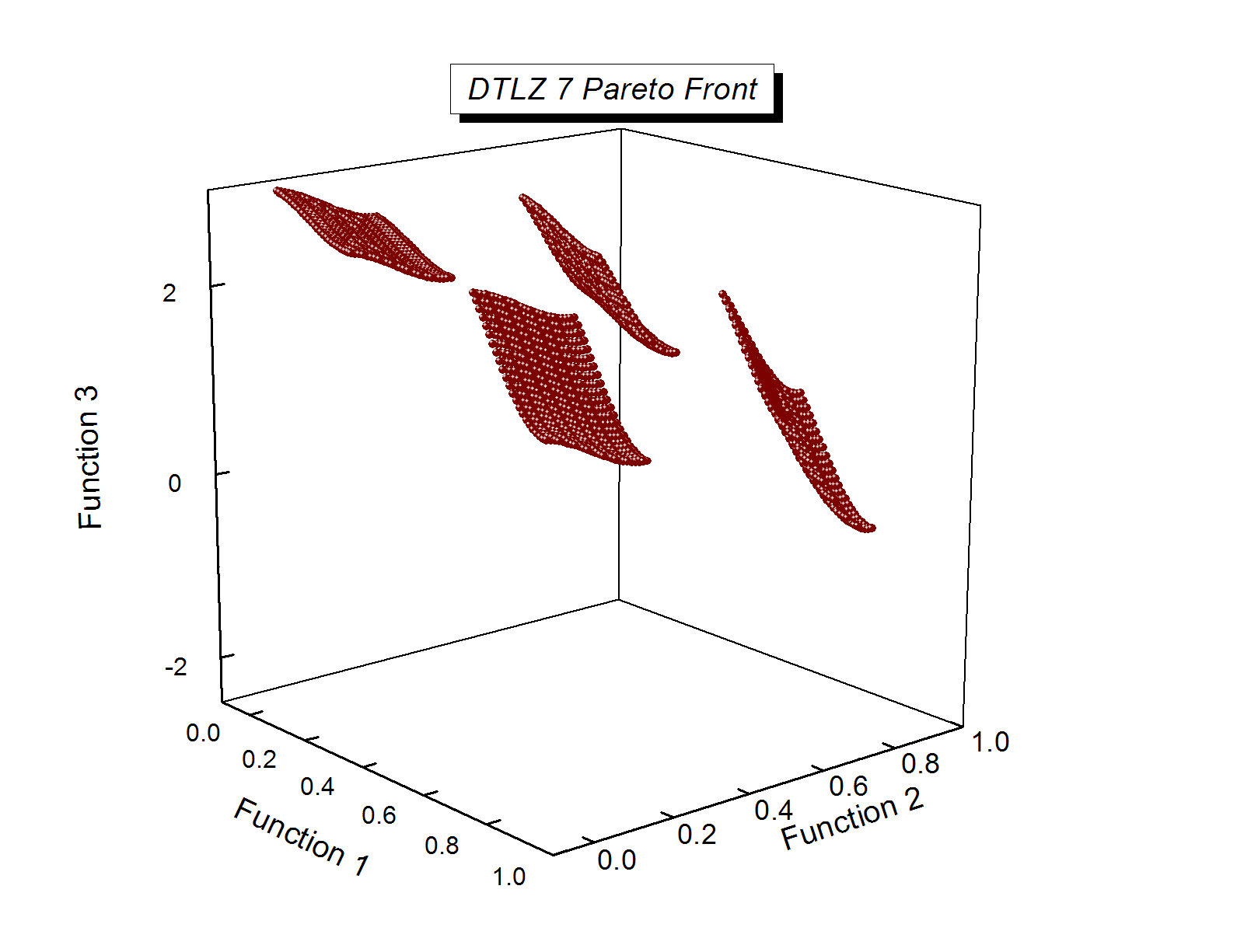

| DTLZ7 |
|---|
| Proposed by Deb et al. [2]. |
| Minimize |
| DTLZ7 Pareto Front (data file) |
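A sketch of DTLZ7. It assumes the standard definition with M objectives and k distance variables in [0, 1]; k = 20 is a common choice:

- f_i = x_i for i < M
- f_M = (1 + g)·h
- g = 1 + (9/k)·Σx_i, summed over the last k variables
- h = M − Σ_{i<M} [f_i/(1 + g)·(1 + sin(3πf_i))]

The Pareto-optimal set has every distance variable at 0, so g = 1. The resulting front is split into 2^(M−1) disconnected regions. The function name is hypothetical.

```python
import math
from typing import List, Sequence


def dtlz7(x: Sequence[float], m: int) -> List[float]:
    """DTLZ7 with m objectives; x has n = m + k - 1 variables in [0, 1]."""
    k = len(x) - m + 1
    if m < 2 or k < 1:
        raise ValueError("need m >= 2 and at least m variables")
    # the first m-1 objectives are the position variables themselves
    f = [float(xi) for xi in x[: m - 1]]
    # g >= 1, with equality when every distance variable is 0
    g = 1.0 + 9.0 / k * sum(x[m - 1:])
    # h folds the sine term into the last objective, creating the disconnected front
    h = m - sum(fi / (1.0 + g) * (1.0 + math.sin(3.0 * math.pi * fi)) for fi in f)
    f.append((1.0 + g) * h)
    return f
```

Sweeping the position variables over [0, 1] with the distance variables fixed at 0 traces the disconnected front. Only the non-dominated subset of those points belongs to the Pareto front.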